Medical Image Acquisition Application - Allowing patients to submit images and videos for clinical review.

A secure medical imaging acquisition application that allows patients to upload videos and images to their clinician or health professional for quick diagnosis. The application is built using serverless public cloud technology, and this article explains the design decisions taken while building it.


Demonstration

To understand this application’s clinical workflow, please watch the five-minute video demonstration. The video shows how health professionals use the portal to register an encounter with a new patient. It then explains how the patient receives the initial contact and verifies their identity. Lastly, it shows how patients upload images and videos from their mobile devices, how the patient controls what the clinician receives, and how the consent model for sharing their media files is applied.

This article then proceeds to explain the design decisions taken during the development of this product.


Cloud Architecture

The application uses Lambda functions to deliver most of its functionality. Two Lambda functions provide the web server capabilities for the consultant portal and the patient-facing Progressive Web Application (PWA). These Lambda functions are registered as targets of an Application Load Balancer (ALB), which uses TLS certificates provisioned by AWS Certificate Manager for the application’s domains. The ALB sits behind a Web Application Firewall (WAF), which provides some protection from rogue actors by geo-fencing the application to users within the United Kingdom.

The patient-facing PWA uses the AWS JavaScript SDK to connect to a Cognito Identity Pool before uploading the media files to a secure S3 bucket. The S3 bucket has two additional Lambda functions configured to trigger when certain file types are uploaded to the bucket.

The first is a video transcoding function, which converts videos uploaded in Apple’s MOV format to MP4. Converting all videos to MP4 ensures that they can be played in the HTML5 video element. The second is an image sizing function that reads uploaded JPG files, calculates each image’s height and width, and writes the values back to the MySQL database. Capturing the height and width of the images allows the consultant portal to display them responsively.

The one part of the infrastructure that isn’t serverless is the MySQL database. We already had an existing Aurora database cluster, so it made sense to re-use that infrastructure here. The application uses MySQL’s AES encryption functions to encrypt patient data within the database, and the Aurora cluster is also encrypted at rest with AES-256 at the storage level.

The cloud infrastructure is deployed and managed using the cloud provisioning tool Terraform.

Code Repositories

The application’s source code is kept in a series of AWS CodeCommit repositories, covering the Lambda function source code, the database schema and the Terraform provisioning scripts. The Lambda functions are compiled with the Go toolchain and deployed using the AWS Command Line Interface (CLI).

Lambda Functions

I chose Go (Golang) as the programming language for the server-side code. Go is a strongly typed language that compiles to a native Linux binary. The native binary starts quickly in the Lambda execution environment, which lowers the cold start overhead associated with serverless technologies. I always compile the Go code for the Amazon Linux 2 (provided.al2) runtime instead of the default Go 1.x runtime, because Amazon Linux 2 provides a stable, secure environment for the longer term.

Lambda Layers

A Lambda layer is an archive containing additional code, such as libraries, or even custom runtimes. When you include a layer in a function, the contents are extracted to the /opt directory in the Lambda execution environment.

I use Lambda layers to share Go code across different Lambda functions. For example, I have a Go function that builds the HTTP response headers, including a Content Security Policy (CSP). This function is packaged as a Lambda layer so that it’s included in every web response. If I need to change the CSP, I change it once in the Lambda layer, and every function using it gets the update once it references the new layer version (layer versions are immutable, so each function points at a specific version).

For the video transcoding capability I could have used Amazon Elastic Transcoder. However, after looking at the costs, I decided to use a pre-built Lambda layer that includes a static build of FFmpeg for Amazon Linux. AWS announced in March 2022 that Lambda functions can be configured with up to 10 GB of ephemeral storage (/tmp), so it made sense to use this capability and transcode the videos within a custom function. The transcoding function downloads each uploaded MOV file, converts it to MP4 and uploads the MP4 file back to the S3 bucket.

I’ve written a separate article that goes into more detail about how I created this function. You can read it at: Creating a serverless video transcoding Lambda function using Go

Logging and Monitoring

The application follows the twelve-factor app methodology for logging: it prints all error messages to stdout, and the Lambda runtime forwards them to Amazon CloudWatch Logs. I use awslogs, a simple command-line tool for querying groups, streams and events from CloudWatch Logs, to monitor the application’s error logs.

I’ve also integrated CloudWatch RUM (real user monitoring) to collect and view client-side data about the web application’s performance from actual user sessions in near real time.

To monitor the S3 bucket’s storage usage, I use the AWS SDK for Go to retrieve the bucket’s CloudWatch metrics, such as the daily BucketSizeBytes storage metric.

Go

Most of the Go code uses the standard library. The following third-party libraries deliver specific functionality:

- AWS Lambda for Go

- AWS SDK for Go - used to integrate with S3 and to produce pre-signed URLs to the media files within the consultant portal. The pre-signed URLs are time-limited, so a shared link stops working once it expires.

- One Time Password utilities for Go - used in the registration and onboarding of clinical staff. It generates a QR code image for easy user enrollment and validates codes produced by the Time-based One-Time Password (TOTP) algorithm (RFC 6238).

- Humane Units - used to present the file sizes of the uploaded media files in a user-friendly way.

Progressive Web Application

The patient-facing application uses the Inter font family. Inter is a typeface carefully crafted and designed for computer screens, which makes it a good choice for web applications viewed on small devices such as mobile phones.

The web pages use responsive web design techniques so that they make the best use of the screen real estate.

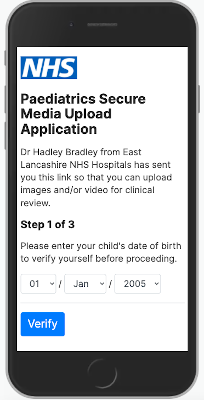

The patient must verify their date of birth before they can proceed. The next screen asks the patient to answer three consent questions before they can upload media files.

The media file upload process follows this logic:

- User selects a file from their mobile device.

- The system checks the MIME type of the selected file to ensure it’s either an image or a video. This prevents patients from uploading other file types by mistake.

- The system uses a SHA512 library to generate a unique filename for the S3 bucket object.

- If the file size is less than 12MB, the putObject function is used to upload the file to S3.

- If the file size is over 12MB, the file is sliced into 10MB chunks. An S3 multipart upload is initiated with the createMultipartUpload function, and each chunk is uploaded separately using the uploadPart function. The system keeps track of the ETag returned for each uploaded chunk, before completing the process by issuing a completeMultipartUpload command.

- If the upload to S3 succeeds, the file’s details are written to the MySQL database.
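The PWA performs these steps in JavaScript with the AWS SDK. The sketch below expresses the same decision logic in Go, the language used for the rest of the project, and leaves out the SDK calls themselves. Several details are assumptions: "12MB" and "10MB" are read as MiB, a file of exactly 12 MiB is treated as multipart, and the object name is a SHA-512 digest of the original file name plus random bytes with the original extension kept, because the article does not say what the real application hashes. The names `isMedia`, `objectKey` and `planUpload` are hypothetical.

```go
package main

import (
	"crypto/rand"
	"crypto/sha512"
	"encoding/hex"
	"fmt"
	"mime"
	"path"
	"strings"
)

// Thresholds from the article, read here as MiB (an assumption).
const (
	singlePutLimit = 12 << 20 // files smaller than this use a single PutObject
	partSize       = 10 << 20 // multipart chunk size (S3 needs >= 5 MiB for all but the last part)
)

// isMedia reports whether a browser-reported MIME type is an image or a video.
func isMedia(contentType string) bool {
	mediaType, _, err := mime.ParseMediaType(contentType) // returns the type lower-cased
	if err != nil {
		return false
	}
	return strings.HasPrefix(mediaType, "image/") || strings.HasPrefix(mediaType, "video/")
}

// objectKey returns a hex-encoded SHA-512 digest as the S3 object name, keeping
// the original extension. Hashing the file name plus 32 random bytes is an assumption.
func objectKey(fileName string) (string, error) {
	nonce := make([]byte, 32)
	if _, err := rand.Read(nonce); err != nil {
		return "", err
	}
	h := sha512.New()
	h.Write([]byte(fileName))
	h.Write(nonce)
	return hex.EncodeToString(h.Sum(nil)) + path.Ext(fileName), nil
}

// part is one chunk of a multipart upload; S3 numbers parts from 1.
type part struct {
	Number     int32
	Start, End int64 // byte range [Start, End) of the file
}

// planUpload returns nil when a single PutObject call is enough. Otherwise it
// returns the byte ranges to send with UploadPart; the ETag returned for each
// part must be kept, in part-number order, for CompleteMultipartUpload.
func planUpload(size int64) []part {
	if size < singlePutLimit {
		return nil
	}
	var parts []part
	for start, n := int64(0), int32(1); start < size; start, n = start+partSize, n+1 {
		end := start + partSize
		if end > size {
			end = size // the last part may be smaller than partSize
		}
		parts = append(parts, part{Number: n, Start: start, End: end})
	}
	return parts
}

func main() {
	fmt.Println(isMedia("video/quicktime"), isMedia("application/pdf")) // true false

	key, err := objectKey("IMG_0001.MOV")
	if err != nil {
		panic(err)
	}
	fmt.Println(len(key), path.Ext(key)) // 132 .MOV

	for _, p := range planUpload(25 << 20) {
		fmt.Println(p.Number, p.Start, p.End)
	}
}
```

With 10 MiB chunks, S3’s limit of 10,000 parts per upload caps a single file at about 97.6 GiB, far beyond anything a phone camera produces.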

AWS SDK for JavaScript

The majority of the S3 uploading functionality leverages the AWS SDK for JavaScript. The SDK authenticates with the Cognito Identity Pool and receives a restricted list of permissions.

We definitely don’t want tech-savvy patients using the JavaScript to list and view S3 objects uploaded by other patients. To prevent this, the media uploading component uses a restricted permissions policy. The policy limits the permitted actions to those needed for multipart uploads; the CreateMultipartUpload, UploadPart and CompleteMultipartUpload operations are all covered by the s3:PutObject permission, and s3:ListBucket is not granted, so the bucket’s contents cannot be listed. The policy also restricts these actions to the single bucket used for this project, which prevents people from attempting to upload files to other buckets within the AWS account.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "cognito-sync:*",
        "mobileanalytics:PutEvents"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:GetObject",
        "s3:ListBucketMultipartUploads",
        "s3:ListMultipartUploadParts",
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::${var.aws_s3_bucket_name}",
        "arn:aws:s3:::${var.aws_s3_bucket_name}/*"
      ]
    }
  ]
}
```

Contact

If you’re interested in this application, and have a requirement to securely capture patient submitted images and videos, then please reach out to me via Twitter. Send me a direct message to @nhsdeveloper and we can arrange a demonstration.